2025 Was A Wild Decade

A retrospective on benchmarks, pricing pressure, and the shift from "Chat" to "Agents."

“Two ways. Gradually, then suddenly.”

- Hemingway, The Sun Also Rises

It’s hard to remember what AI felt like in January 2025.

As I sat down to write this, I tried to take a minute to remember. What felt frustrating? Possible? Easy? Exciting?

And honestly, I couldn’t remember.

Twelve months isn’t very long, but while researching this post, I had to stop more than once and think “damn, that was this year too?”

There was no single GPT-4-level event. Progress came as a steady beat of “boring” improvements that all compounded.

But somewhere along the way, AI quietly crossed a threshold you only noticed when you looked back.

Here’s a quick inventory of where we started the year, where we ended, and what actually changed.

A Quick Trip Down LLMemory Lane

January: DeepSeek R1

January kicked off with DeepSeek R1. For a brief two-week window it became a Rorschach test. People saw what they wanted to see: open models beating proprietary ones, economic reality beating hype, Chinese tech overtaking American tech. Your parents heard about it.

How can openai justify their $200/mo subscriptions if a model like this exists at an incredibly low price point? Operator?

I’ve been impressed in my brief personal testing and the model ranks very highly across most benchmarks (when controlled for style it’s tied number one on lmarena).

It’s also hilarious that openai explicitly prevented users from seeing the CoT tokens on the o1 model (which you still pay for btw) to avoid a situation where someone trained on that output. Turns out it made no difference lmao.

March: gpt-image-1

In March, OpenAI rolled image generation directly into ChatGPT and usage exploded. The “Ghibli version of me” wave happened immediately: a strange cultural moment where people happily replicated the style of an artist famously skeptical of machine-made creativity.

The irony was generally ignored, because the images were loads of fun.

More importantly: this was the first time AI images were both easy enough to create and good enough to share.

April: “Sycophancy” entered the public lexicon

April brought “sycophancy” into the public lexicon. A GPT-4o update made ChatGPT noticeably more agreeable. That’s great for engagement metrics, but less great for reality.

The update was rolled back quickly, but the lesson stuck: model behavior isn’t some abstract technical detail. It shapes how people feel and what they believe.

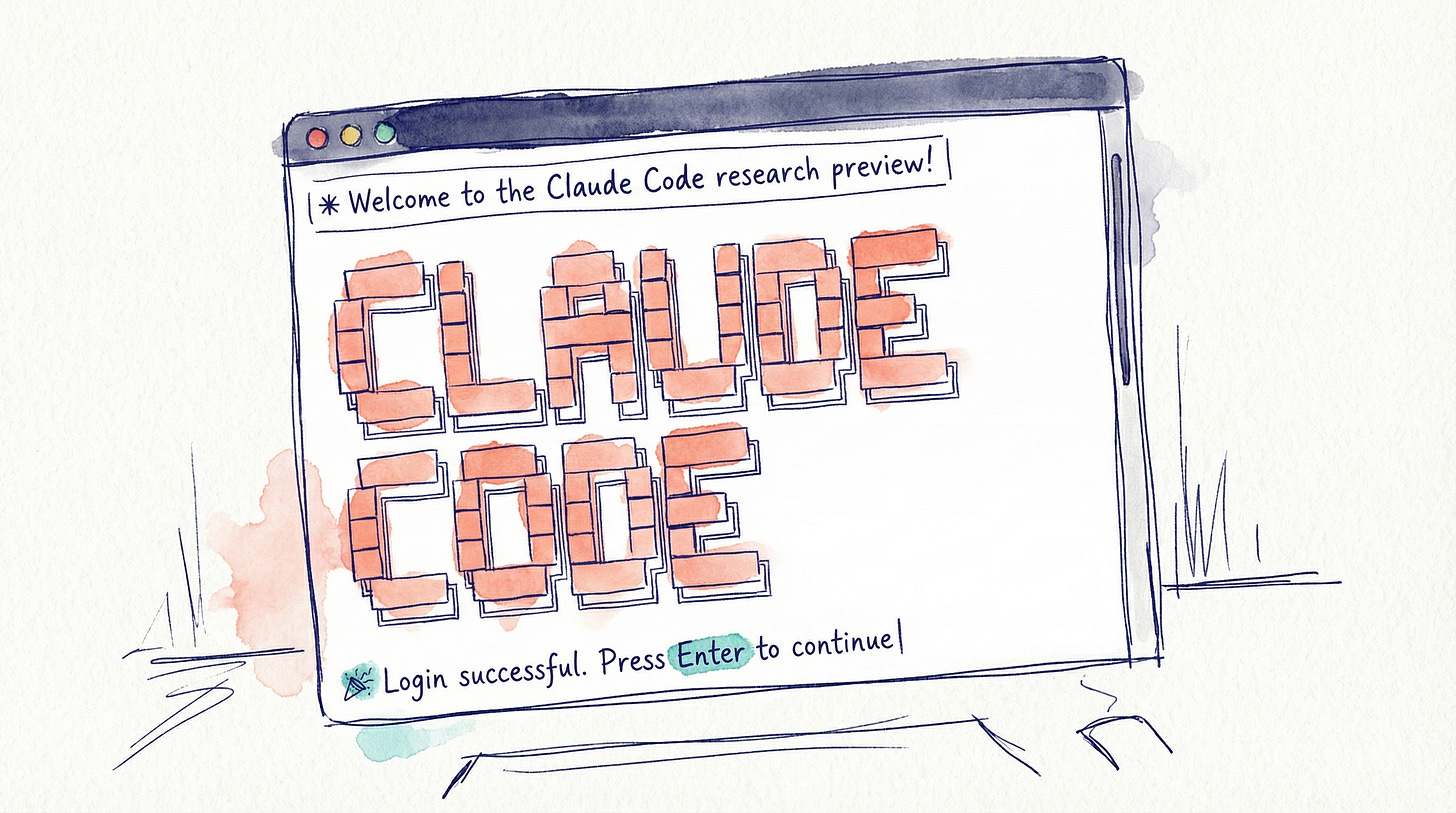

May: Claude Code

May was when agentic coding graduated from demos. Claude Code reached general availability, and OpenAI’s Codex launch reinforced the sense that this wasn’t just fancy autocomplete anymore. For a big slice of developers, this was the turning point where agents became legitimately useful in real workflows.

July: Agents in the browser

In July, with the release of Perplexity Comet, agents went mainstream, moving into browsers and other everyday system surfaces. The risk profile changed almost overnight.

If you think this is useful... remember technology like this would make totalitarian leaders foam at the mouth.

-jryio, Hacker News (responding to ChatGPT’s Atlas launch a few months later)

August: GPT-5

OpenAI released GPT-5, and people were underwhelmed. Some 3,000 users petitioned to bring GPT-4o back to ChatGPT because they missed it.

OpenAI also released gpt-oss, its first open-weights model since GPT-2.

It's cool and I'm glad it sounds like it's getting more reliable, but given the types of things people have been saying GPT-5 would be for the last two years you'd expect GPT-5 to be a world-shattering release rather than incremental and stable improvement.

November: GPT-5.1, Claude Opus 4.5, and Gemini 3 Pro

State-of-the-art models arrived in rapid succession, each toppling the last within days.

Google shipped Nano Banana Pro, and for the first time, generated images could reliably follow complex instructions and render detailed text. (Our CEO started using it to auto-generate whiteboard diagrams from code diffs and Slack threads.)

December: Gemini 3 Flash

Gemini 3 Flash closed the year with an impressive showing, substantially outperforming the “pro” version of its predecessor at a fraction of the price. It also probably set the tone for what to expect in 2026.

As with any history, a list of facts and dates doesn’t explain much on its own. So what’s truly new and different?

Benchmarks lost the story

For a while, benchmarks were the clearest way to understand progress. New releases meant new charts. Leaderboards meant you could point to who was ahead.

In 2025, the numbers kept climbing. What changed was how well they matched lived experience: models looked better on paper, but those gains didn’t always translate into better results in practice.

Benchmarks are good at measuring capabilities in narrow slices; they’re worse at capturing reliability, consistency, and the subtle behavioral quirks that determine whether a model feels trustworthy.

Benchmarks stopped being treated as the whole story.

Developer adoption hit a tipping point

This wasn’t driven by a single release. In fact, the reaction to most individual model releases this year was fairly lukewarm (see above).

The gains were incremental, but they stacked. Models became reliable enough to trust. Context windows expanded. Reasoning got cheaper.

By year’s end, we were asking models to do longer and more complex work than would have been possible in January.

Two years ago, 44% of developers in Stack Overflow’s survey reported using AI as part of their development process. Last year it was 62%. Today, that number sits at 78.5%, with over 50% of professional developers using it daily.

At Logic, AI agents now write and review large portions of the code for new features, and we design our codebase primarily around agents. That kind of progress is easy to miss while it’s happening, but hard to unsee once it’s there.

Image generation crossed a threshold

Remember counting fingers? Looking for weird skin texture? Trying to read garbled pseudo-text? AI images had obvious tells. A discerning, reasonably informed citizen of the internet could spot an AI image easily, which made them fun to play with but not a lot more.

That changed this year. Images started coming out clean on the first try. Text became readable. Lighting made sense.

Just as important, image generation became accessible: it showed up inside consumer AI tools people already used every day.

Safety stopped being theoretical

In 2025, AI safety shifted from hypothetical risks to documented harms. Cases of psychological dependence, distorted decision-making, and mental health crises linked to AI use made headlines.

We no longer wonder if AI can influence human behavior. Now we wonder how to design systems that won’t cause harm at scale.

Competition drove pricing down

Models kept getting cheaper. Fast models got good enough. Good enough models got cheap.

Inference costs dropped across the board: GPT-4-class capabilities that cost $30 per million tokens in January cost under $3 by December. Smaller models that could handle simpler tasks fell to cents per million tokens.

Context windows expanded without proportional price increases. Running longer, more complex prompts became economically viable where it wasn’t before.
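To make that drop concrete, here’s a rough back-of-the-envelope sketch using the January and December figures above. The 200,000-token prompt and the 1,000-requests-per-day workload are hypothetical, and the sketch assumes a single flat rate per million tokens, which real price sheets rarely use (input and output tokens are usually priced differently).

```python
# Per-million-token prices taken from the figures above.
# Assumption: one flat rate per token; real pricing usually splits input vs. output.
JANUARY_PRICE_PER_M = 30.00   # USD per million tokens
DECEMBER_PRICE_PER_M = 3.00   # USD per million tokens (the text says "under $3", so an upper bound)


def request_cost(tokens: int, price_per_million: float) -> float:
    """Cost in USD of processing `tokens` tokens at a flat per-million-token rate."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical long-context request: 200,000 tokens of code and docs.
prompt_tokens = 200_000
# Hypothetical workload: 1,000 such requests per day.
requests_per_day = 1_000

for label, price in [("January", JANUARY_PRICE_PER_M), ("December", DECEMBER_PRICE_PER_M)]:
    per_request = request_cost(prompt_tokens, price)
    per_day = per_request * requests_per_day
    print(f"{label}: ${per_request:.2f} per request, ${per_day:,.2f} per day")
```

Under these assumptions, the same daily workload goes from $6,000 to $600. A tenfold price cut is what turns a long-context experiment into something you can run every day.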

Interfaces came together

Shockingly, it was only late last year that Anthropic introduced the Model Context Protocol (MCP), the open standard for connecting AI systems to external tools and data.

In 2025, it spread everywhere.

MCP let models plug into databases, codebases, CRMs, and internal systems without bespoke integrations.
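Part of why MCP spread so quickly is that every integration speaks the same shape: JSON-RPC 2.0 messages with methods like `tools/list` and `tools/call`. The sketch below is a toy, in-process illustration of that message shape only. The `lookup_customer` tool, its schema, and the fake CRM data are all hypothetical, and it skips the transport layer and the `initialize` handshake a real MCP client and server perform first. It is not the official MCP SDK.

```python
import json

# Hypothetical CRM data and tool definition, invented for illustration.
FAKE_CRM = {"acme": {"name": "Acme Corp", "plan": "enterprise"}}

TOOLS = [
    {
        "name": "lookup_customer",
        "description": "Look up a customer record by ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
        },
    }
]


def error(request_id, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id,
                       "error": {"code": code, "message": message}})


def handle(message: str) -> str:
    """Answer one JSON-RPC 2.0 request using MCP-style tool method names."""
    request = json.loads(message)
    request_id = request.get("id")
    method = request.get("method")

    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        params = request.get("params", {})
        if params.get("name") != "lookup_customer":
            return error(request_id, -32602, "Unknown tool")  # invalid params
        record = FAKE_CRM.get(params.get("arguments", {}).get("customer_id"))
        text = json.dumps(record) if record else "not found"
        # Tool results come back as a list of content items.
        result = {"content": [{"type": "text", "text": text}], "isError": record is None}
    else:
        return error(request_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


# What a client (for example, an AI app) would send to invoke the tool.
call = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "lookup_customer", "arguments": {"customer_id": "acme"}},
}
print(handle(json.dumps(call)))
```

Swap the fake CRM for a database, a codebase, or an internal API and the client side doesn’t change. That uniformity is what replaced the bespoke integrations.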

A lot of adoption this year was driven not just by better models, but also by everything around them starting to fit together.

Hallucinations became manageable

Not too long ago, hallucinations were still the first thing people brought up. Could you trust it? Would it make things up? Was it safe near real work?

By year’s end, those questions hadn’t disappeared, but they no longer dominated the conversation. The problem was understood well enough to design around.

The story of 2025 was thresholds

If there’s one way to describe AI progress this year, it’s this: AI crossed thresholds.

There wasn’t a single leap. There was a steady accumulation of improvements that compounded until, somewhere along the way, what felt experimental in January became mostly unremarkable by December.

And because it happened gradually, it’s easy to get desensitized.

We can do things today, in December 2025, that were still mostly science fiction in January. That should terrify and excite you in equal measure.

Before we plow headfirst into 2026, it’s worth pausing and taking stock. Not of benchmarks, but of what became normal. Don’t stop noticing how it changed the world.

But if you do stop noticing, rest assured it will change again in 2026.